As long as the procedure generating the event conforms to the random variable model under a Binomial distribution, the calculator applies. The single-trial probability can be entered as a decimal or a fraction (0.5 or 1/2, 1/6 and so on), along with the number of trials and the number of events you want the probability calculated for.
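As a rough illustration of what such a calculator computes, here is a minimal sketch of the exact and cumulative binomial probabilities. The function names `binom_pmf` and `binom_cdf` are hypothetical and chosen for this example only; they are not taken from the calculator itself.

```python
from math import comb

def binom_pmf(x: int, n: int, p: float) -> float:
    """Probability of observing exactly x events in n independent trials."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def binom_cdf(x: int, n: int, p: float) -> float:
    """Cumulative probability P(X <= x), summed term by term."""
    return sum(binom_pmf(k, n, p) for k in range(x + 1))

# Example inputs (assumed for illustration): a fair coin tossed 10 times.
exact_three = binom_pmf(3, 10, 0.5)     # P(X = 3)
at_most_three = binom_cdf(3, 10, 0.5)   # P(X <= 3)
more_than_three = 1 - at_most_three     # P(X > 3), the complementary tail
```

The direct summation is fine for small n; for very large n a library routine would likely be a better choice.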

#CALCULATOR F DISTRIBUTION TRIAL#

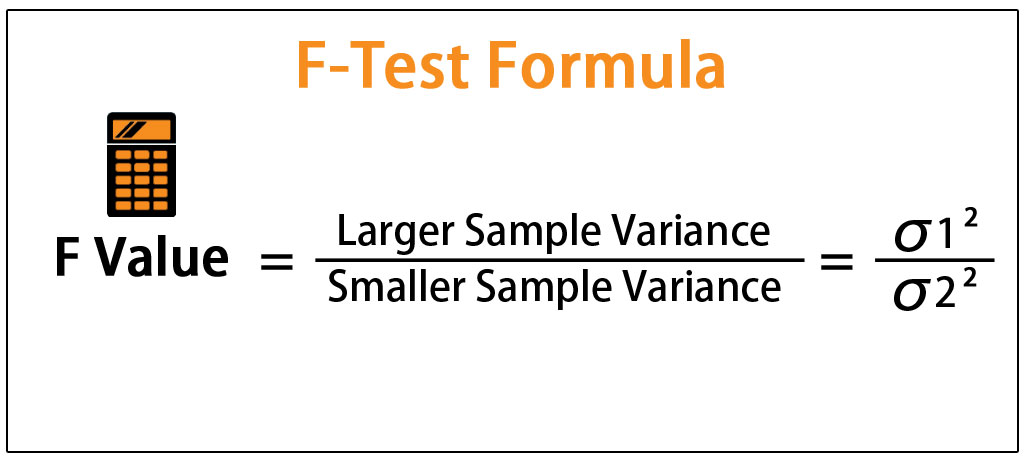

Using the Binomial Probability Calculator

You can use this tool to solve either for the exact probability of observing exactly x events in n trials, or the cumulative probability of observing X ≤ x (the binomial cumulative distribution function, CDF), or the cumulative probabilities of observing X < x, X ≥ x, or X > x. Simply enter the probability of observing an event (outcome of interest, success) on a single trial.

The following tests produce F-scores. All of them are right-tailed tests.

- A test for the equality of variances in two normally distributed populations. Its test statistic follows the F-distribution with (n - 1, m - 1) degrees of freedom, where n and m are the respective sample sizes.
- ANOVA is used to test the equality of means in three or more groups that come from normally distributed populations with equal variances. We arrive at the F-distribution with (k - 1, n - k) degrees of freedom, where k is the number of groups, and n is the total sample size (in all groups together).
- A test for overall significance of regression analysis. The test statistic has an F-distribution with (k - 1, n - k) degrees of freedom, where n is the sample size, and k is the number of variables (including the intercept). Once this test has established that a linear relationship is present in your data sample, you can calculate the coefficient of determination, R², which indicates the strength of this relationship.
- A test to compare two nested regression models. The test statistic follows the F-distribution with (k₂ - k₁, n - k₂) degrees of freedom, where k₁ and k₂ are the number of variables in the smaller and bigger models, respectively, and n is the sample size. You may notice that the F-test of overall significance is a particular form of the F-test for comparing two nested models: it tests whether our model does significantly better than the model with no predictors (i.e., the intercept-only model).
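To make the ANOVA degrees of freedom (k - 1, n - k) concrete, here is a minimal sketch that computes a one-way ANOVA F-score from raw group data using only the standard library. The function name `one_way_anova_f` and the sample numbers are assumptions made for this illustration, and turning the F-score into a p-value would still require the F-distribution's right tail, which is not shown here.

```python
from statistics import fmean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)                      # number of groups
    n = sum(len(g) for g in groups)      # total sample size
    grand_mean = fmean(v for g in groups for v in g)

    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)

    df_between, df_within = k - 1, n - k
    f_score = (ss_between / df_between) / (ss_within / df_within)
    return f_score, df_between, df_within

# Made-up data: three groups of three observations each.
f_score, df1, df2 = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
```

With three groups and nine observations in total, the degrees of freedom come out as (2, 6), matching (k - 1, n - k).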

In the formulas below, S stands for a test statistic, x for the value it produced for a given sample, and Pr(event | H₀) is the probability of an event, calculated under the assumption that H₀ is true:

- Left-tailed test: p-value = Pr(S ≤ x | H₀)
- Right-tailed test: p-value = Pr(S ≥ x | H₀)
- Two-tailed test: p-value = 2 * min{Pr(S ≤ x | H₀), Pr(S ≥ x | H₀)}

(By min{a, b} we denote the smaller of the numbers a and b.)

The most important tests that produce F-scores are listed above.
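The three p-value formulas can be sketched in a few lines once the distribution of S under H₀ is known. As an assumption for this example only, S is taken to be binomial (the number of successes in n trials with success probability p0 under H₀); the helper name `tail_p_values` is hypothetical.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def tail_p_values(x: int, n: int, p0: float):
    """Return (left, right, two_tailed) p-values for an observed count x."""
    left = sum(binom_pmf(k, n, p0) for k in range(0, x + 1))   # Pr(S <= x | H0)
    right = sum(binom_pmf(k, n, p0) for k in range(x, n + 1))  # Pr(S >= x | H0)
    two_tailed = 2 * min(left, right)
    return left, right, two_tailed

# Assumed example: 8 successes in 10 trials, with H0 stating p0 = 0.5.
left, right, two_tailed = tail_p_values(8, 10, 0.5)
```

Note that for a discrete statistic both tails include the observed value x, so `left + right` exceeds 1 by exactly Pr(S = x | H₀).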

Formally, the p-value is the probability that the test statistic will produce values at least as extreme as the value it produced for your sample. It is crucial to remember that this probability is calculated under the assumption that the null hypothesis is true!

More intuitively, the p-value answers the question: assuming that I live in a world where the null hypothesis holds, how probable is it that, for another sample, the test I'm performing will generate a value at least as extreme as the one I observed for the sample I already have?

It is the alternative hypothesis which determines what "extreme" actually means, so the p-value depends on the alternative hypothesis that you state: left-tailed, right-tailed, or two-tailed.
